Approach and Methodology

Data migration is the process of moving data from one or more legacy systems into a new system that replaces them. Quality data is extracted from the legacy system and migrated to the new system in the target system's data format.

DATA MIGRATION

Ganesh Mahto

Migrating data involves many steps.

Planning

Project Scope and Timeline

The project scope must be clearly defined during migration analysis, and estimates should be based on that scope. The scope can be refined at a later stage of the migration. Settling aspects such as budget, timeline, and resources at an early stage helps the migration succeed and reduces risk. Plan for multiple mock migrations that repeat the same actions each time.

Migration Approach

The data migration approach should be decided during planning, according to the organization's requirements.

Big Bang Migrations

Big bang migrations complete the entire migration in a small, defined processing window. The risk of this approach is that systems may be inaccessible during the migration window. At least one dry run is required before go-live to mitigate that risk.

Trickle Migrations

This is an incremental approach in which the source and target systems run in parallel and data is migrated in multiple phases. This approach carries design risk: the source and target systems may undergo additional changes during the migration phase, which can force changes to the migration design and testing.

Assessment and Analysis

Review Source and Target Systems

A good understanding of source and target system functionality contributes to a successful data migration. Documentation, access to the systems, workshops, and interaction with business users all help you understand how the systems work. That understanding also supports data analysis, which can identify gaps.

Data Management

Data management analysis is an essential part of preparing data for migration; it provides an overview of the source and target system data. This helps prepare standardized, good-quality data that fits the target system. Historical data is a crucial consideration in any data migration: although it has benefits, it can increase budget and complexity.

Data Profiling

Data profiling examines the source data against the target system's requirements, for example to find duplicate records and NULL values. It improves data quality and reduces data quantity.
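As a rough illustration of profiling, the sketch below counts missing values per field and lists key values that occur more than once. The `profile` function, the field names, and the sample records are all hypothetical and stand in for a real source extract; a real profile would usually cover many more checks.

```python
from collections import Counter


def profile(rows, key_field):
    """Return the row count, null counts per field, and duplicated key values."""
    null_counts = Counter()
    key_counts = Counter()
    for row in rows:
        for field, value in row.items():
            # Treat None and blank strings as missing values.
            if value is None or str(value).strip() == "":
                null_counts[field] += 1
        key_counts[row.get(key_field)] += 1
    duplicates = sorted(
        key for key, count in key_counts.items() if count > 1 and key is not None
    )
    return {
        "rows": len(rows),
        "nulls": dict(null_counts),
        "duplicate_keys": duplicates,
    }


# Hypothetical sample records (assumption: person_id is the business key).
sample = [
    {"person_id": "100", "name": "Asha", "email": "asha@example.com"},
    {"person_id": "101", "name": "Ravi", "email": ""},
    {"person_id": "100", "name": "Asha", "email": "asha@example.com"},
    {"person_id": "102", "name": None, "email": "lee@example.com"},
]
report = profile(sample, "person_id")
print(report)
```

The report can then feed the cleansing step: duplicated keys and fields with many missing values are the first candidates for review.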

Data Volume and Quality

The volume of data must be analyzed. It can often be reduced after profiling, because old data may not need to be migrated and can instead be kept as archival data for reporting purposes, such as statutory requirements or requests for old payslips.
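One simple way to separate data to migrate from data to archive is a cutoff date. The sketch below assumes each record carries an ISO-formatted date; the `split_by_cutoff` helper, the field names, and the cutoff itself are illustrative assumptions, and the real cutoff would come from business and statutory requirements.

```python
from datetime import date


def split_by_cutoff(rows, date_field, cutoff):
    """Split rows into (migrate, archive) by comparing an ISO date field to cutoff."""
    migrate, archive = [], []
    for row in rows:
        record_date = date.fromisoformat(row[date_field])
        # Records on or after the cutoff are migrated; older ones are archived.
        (migrate if record_date >= cutoff else archive).append(row)
    return migrate, archive


# Hypothetical payslip records and cutoff date.
payslips = [
    {"payslip_id": 1, "pay_date": "2015-03-31"},
    {"payslip_id": 2, "pay_date": "2021-06-30"},
    {"payslip_id": 3, "pay_date": "2023-01-31"},
]
to_migrate, to_archive = split_by_cutoff(payslips, "pay_date", date(2020, 1, 1))
print(len(to_migrate), "to migrate,", len(to_archive), "to archive")
```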

Data Cleansing

All data issues must be resolved before migration, and a data impact analysis must be completed before cleansing.

Data Security

All sensitive information, including customer and personal data, must be assigned a security level, which can be determined during the assessment. The data must be secured at that level while it is transferred to the new system. Encryption can be used for data with a higher security level.

Design and Development

Once the data has been analyzed, map the new fields, design a template for each entity (for example, Person Data), and build transformation rules so that the data can be transformed to fit the target system.

Mapping specifications, rules, and functional documents must be discussed and signed off with the concerned authority. The template can validate the transformation and business rules, which reduces the effort of loading data and improves system performance, so this should be considered while designing the template.
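A mapping specification with transformation rules can be expressed directly as data. The following is a minimal sketch, assuming a Person entity; every field name, rule, and required-field list here is hypothetical and would in practice come from the signed-off mapping document.

```python
from datetime import datetime

# Hypothetical mapping for a "Person" entity:
# target field -> (source field, transformation rule).
PERSON_MAPPING = {
    "PersonNumber": ("emp_no", lambda v: v.strip().zfill(6)),
    "LastName": ("surname", lambda v: v.strip().title()),
    "DateOfBirth": (
        "dob",
        lambda v: datetime.strptime(v, "%d/%m/%Y").strftime("%Y-%m-%d"),
    ),
}
# Assumed template rule: these target fields must not be empty.
REQUIRED_FIELDS = ("PersonNumber", "LastName")


def transform(source_row, mapping):
    """Build a target row by applying each rule to its mapped source field."""
    return {
        target: rule(source_row[source])
        for target, (source, rule) in mapping.items()
    }


def validate(target_row, required):
    """Return a list of template validation errors (empty when the row is valid)."""
    return [f"{field} is required" for field in required if not target_row.get(field)]


source_row = {"emp_no": " 4521 ", "surname": "sharma", "dob": "07/11/1985"}
target_row = transform(source_row, PERSON_MAPPING)
errors = validate(target_row, REQUIRED_FIELDS)
print(target_row, errors)
```

Keeping the mapping as data rather than scattered code makes it easier to review against the specification and to rerun unchanged across mock migrations.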

Code is then developed to extract and load data according to the template and transformation rules.

Configuration will be required to fill any gaps.

Execution and Cut Over

Before extracting and loading data, identify the sequence of the data load. Data must then be extracted and loaded in that sequence, in small segments or entity by entity. Each load must be verified against the mapping specification and rules. This exercise must be conducted in several phases (mock migrations), which helps reduce errors and increase data quality. In later phases, business users should be involved in data governance to track and report on data quality.
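The load sequence can be derived from the dependencies between entities, and each entity can then be loaded in small segments. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later); the entity names, their dependencies, and the `batches` helper are assumptions made for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical dependencies: each entity maps to the entities that must load first.
DEPENDENCIES = {
    "Person": set(),
    "Assignment": {"Person"},
    "Salary": {"Assignment"},
    "Payslip": {"Assignment", "Salary"},
}

# A topological order guarantees parents are loaded before their children.
load_order = list(TopologicalSorter(DEPENDENCIES).static_order())


def batches(rows, size):
    """Yield successive segments of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


print(load_order)
for segment in batches(list(range(5)), 2):
    print(segment)
```

Small segments make a failed load cheaper to diagnose and rerun, which matters when the same sequence is repeated across mock migrations.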

UAT and Data Reconciliation

This phase should include tests of business rules, applications, system performance, data quality, and integration. Data should be reconciled and approved by the business before proceeding.
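Reconciliation typically compares record counts and record content between what was expected and what was loaded. The sketch below is one possible approach, assuming the source rows have already been transformed into the target format so that values are directly comparable; the `reconcile` function and the sample data are hypothetical.

```python
import hashlib


def row_fingerprint(row, fields):
    """Hash the selected fields of a row so rows can be compared cheaply."""
    joined = "|".join(str(row.get(field, "")) for field in fields)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, fields):
    """Compare counts, missing keys, and content mismatches between two row sets."""
    src = {row[key]: row_fingerprint(row, fields) for row in source_rows}
    tgt = {row[key]: row_fingerprint(row, fields) for row in target_rows}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical expected (transformed source) rows and rows read back from the target.
expected = [{"id": 1, "name": "Asha"}, {"id": 2, "name": "Ravi"}, {"id": 3, "name": "Lee"}]
loaded = [{"id": 1, "name": "Asha"}, {"id": 2, "name": "Ravy"}]
summary = reconcile(expected, loaded, "id", ["name"])
print(summary)
```

A summary like this gives the business a concrete artifact to review and approve before the migration proceeds.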

Solution Design

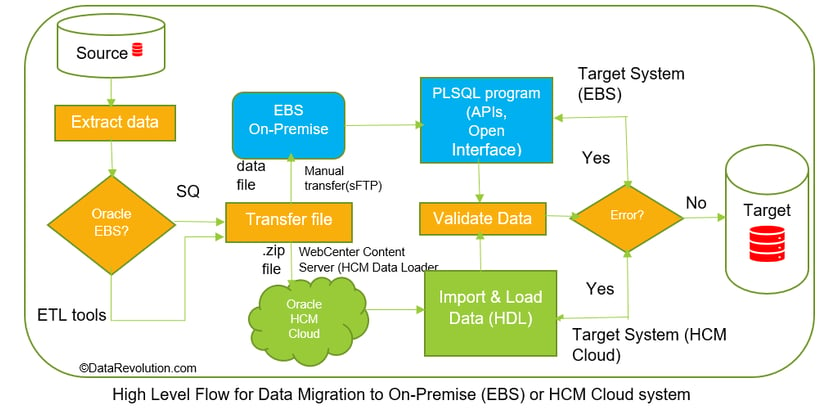

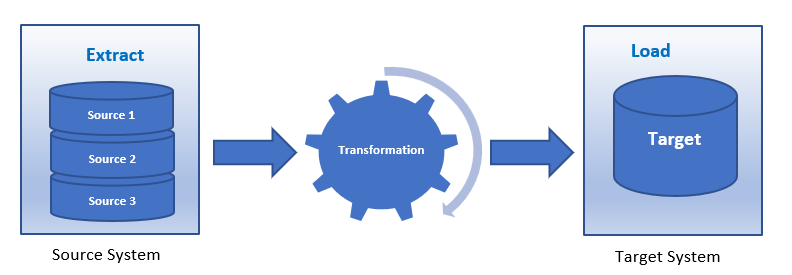

Data must be extracted from the source, transformed, and loaded into the target system.

Data is extracted from the source system into the file system in a defined format; the extraction can be either full or partial. The data should be extracted according to the mapping and template that describe the relationship between the source and target systems. This ensures that data is extracted in the target system's data structure, whose format and content differ from those of the source system.
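The difference between a full and a partial extraction can be sketched as a single function with an optional filter. This example writes CSV with the standard `csv` module and uses in-memory buffers in place of real files; the `extract` function, the `last_updated` field, and the sample rows are assumptions, not part of any specific tool.

```python
import csv
import io


def extract(rows, columns, out, changed_since=None, changed_field="last_updated"):
    """Write rows to CSV; if changed_since is given, write only newer rows (partial)."""
    writer = csv.DictWriter(out, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    written = 0
    for row in rows:
        # ISO-8601 date strings compare correctly as plain strings.
        if changed_since is not None and row[changed_field] < changed_since:
            continue
        writer.writerow(row)
        written += 1
    return written


# Hypothetical source rows; the columns list plays the role of the template.
source_rows = [
    {"person_id": "100", "name": "Asha", "last_updated": "2024-01-15"},
    {"person_id": "101", "name": "Ravi", "last_updated": "2024-06-02"},
    {"person_id": "102", "name": "Lee", "last_updated": "2023-11-20"},
]
columns = ["person_id", "name"]

full_buffer = io.StringIO()
full_count = extract(source_rows, columns, full_buffer)

partial_buffer = io.StringIO()
partial_count = extract(source_rows, columns, partial_buffer, changed_since="2024-06-01")
print(full_count, partial_count)
```

In a real run the buffers would be replaced by files opened with `newline=""`, as the `csv` module documentation recommends.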

Many ETL tools are available, depending on the source and target systems; they need to be integrated with both systems to extract and load data. Custom ETL tools can also be built using Oracle tools for Oracle on-premises or Oracle Cloud application data migration.

Data migration can be done either on-premises or in the cloud. The two have different architectures, so the architecture must be considered during solution design.

There are multiple ways to transfer data files from source to target, such as SFTP (SSH File Transfer Protocol) or a web service (SOAP), among others.

The process of migrating data from source to target can be manual or automated.

